
Title Page

Abstract

Abstract (in Korean)

Preface

Contents

Chapter 1. Introduction 17

1.1. Spectral Discrepancy 17

1.1.1. Analysis of Spectral Discrepancy 18

1.1.2. Frequency-based Deepfake Detector 20

1.2. Previous Works for Spectral Discrepancy 20

1.2.1. Spatial Domain Approach 20

1.2.2. Frequency Domain Approach 21

1.3. Objective of This Study 21

Chapter 2. Spectrum Analysis of Generative Models 23

2.1. Frequency Analysis of GANs 23

2.2. Frequency Analysis of DMs 24

2.2.1. Review of Diffusion Model 25

2.2.2. Wiener Filter-Based Model 26

Chapter 3. Spectrum Translation for Refinement of Image Generation 29

3.1. Generative Adversarial Learning 29

3.2. Patch-Wise Contrastive Learning 30

3.3. STIG Framework 31

3.4. Auxiliary Regularizations 31

Chapter 4. Experiment 35

4.1. Experimental Setup 35

4.1.1. Training Details 35

4.1.2. Dataset Preparation and Evaluation Metrics 36

4.1.3. Comparison Methods 37

4.2. Results and Discussions 38

4.2.1. Frequency Domain Results 38

4.2.2. Improvement on Image Quality 39

Chapter 5. Ablation Study 43

5.1. Ablation Study of Loss Functions 43

5.2. STIG on Frequency-Based Detector 44

Chapter 6. Conclusion 47

Bibliography 48

Appendix A. Visual Examples 53

A.1. Image and Spectrum 53

A.2. Comparison of Averaged Spectrum 62
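Figure 1.2 compares the outputs of different upsampling operations. One widely cited explanation for spectral discrepancy in generated images is that upsampling layers leave periodic high-frequency traces. The minimal 1D sketch below illustrates that idea under stated assumptions: it uses NumPy, compares zero insertion (what a strided transposed convolution does before its kernel is applied) with nearest-neighbor repetition, and measures how much energy lands in the upper frequency band. The function names are hypothetical, and this is not the thesis's own experiment.

```python
import numpy as np


def zero_insert_upsample(x: np.ndarray, factor: int = 2) -> np.ndarray:
    """Insert (factor - 1) zeros between samples, as a strided transposed
    convolution does before its kernel is applied."""
    y = np.zeros(len(x) * factor, dtype=float)
    y[::factor] = x
    return y


def nearest_upsample(x: np.ndarray, factor: int = 2) -> np.ndarray:
    """Repeat each sample `factor` times (nearest-neighbor upsampling)."""
    return np.repeat(x, factor).astype(float)


def high_freq_energy_ratio(y: np.ndarray) -> float:
    """Fraction of spectral energy in the upper half of the positive
    frequencies (at or above one quarter of the sampling rate)."""
    power = np.abs(np.fft.rfft(y)) ** 2
    half = len(power) // 2
    return float(power[half:].sum() / power.sum())


# A purely low-frequency test signal: 3 cycles over 64 samples.
n = np.arange(64)
x = np.cos(2 * np.pi * 3 * n / 64)

# Zero insertion mirrors the spectrum, so a copy of the tone appears near Nyquist.
print(high_freq_energy_ratio(zero_insert_upsample(x)))  # about 0.5
# Repetition acts as a crude low-pass filter, so the mirrored copy is strongly damped.
print(high_freq_energy_ratio(nearest_upsample(x)))      # well below 0.01
```

In a real generator the kernel that follows zero insertion partly suppresses the mirrored copy; how well it does so is learned, which is one reason residual high-frequency peaks can remain.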

Figure 1.1. Spectral discrepancies between the real and generated image in various generative networks. 18

Figure 1.2. Examples of upsampling results for various upsampling operations commonly used in computer vision. 19

Figure 2.1. Estimation of an ideal low-pass filter by simulation with an example sinc function in the spatial domain. 24

Figure 2.2. Frequency response of the denoising filter for reverse process in diffusion models. (Note that t ∈ [0,1000] in this example.) 28

Figure 3.1. Illustration of the proposed framework STIG. 32

Figure 3.2. Example of the chessboard integration. 33

Figure 4.1. STIG examples on StarGAN 42

Figure 4.2. STIG examples on DDIM-Church 42

Figure 5.1. Comparison with a frequency domain method, SpectralGAN [1], for color tone. Examples are sampled from CycleGAN, StarGAN2, and Style-... 44

Figure A.1. Original generated and STIG-refined Image-Spectrum pairs of CycleGAN [2] benchmark. 54

Figure A.2. Original generated and STIG-refined Image-Spectrum pairs of StarGAN [3] benchmark. 55

Figure A.3. Original generated and STIG-refined Image-Spectrum pairs of StarGAN2 [4] benchmark. 56

Figure A.4. Original generated and STIG-refined Image-Spectrum pairs of StyleGAN [5] benchmark. 57

Figure A.5. Original generated and STIG-refined Image-Spectrum pairs of DDPM-Face [6] benchmark. 58

Figure A.6. Original generated and STIG-refined Image-Spectrum pairs of DDPM-Church [6] benchmark. 59

Figure A.7. Original generated and STIG-refined Image-Spectrum pairs of DDIM-Face [7] benchmark. 60

Figure A.8. Original generated and STIG-refined Image-Spectrum pairs of DDIM-Church [7] benchmark. 61

Figure A.9. Averaged magnitude of the spectrum for generative adversarial networks. 63

Figure A.10. Averaged magnitude of the spectrum for diffusion models. 64
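Figures A.9 and A.10 show averaged spectrum magnitudes for GANs and diffusion models. A common way to produce such a figure is sketched below, assuming grayscale float images of equal size stacked as an (N, H, W) NumPy array. The thesis's exact preprocessing (color handling, normalization, log scaling) is not stated in this listing, so those choices, and the helper names, are assumptions.

```python
import numpy as np


def averaged_magnitude_spectrum(images: np.ndarray) -> np.ndarray:
    """Mean 2D DFT magnitude over a stack of grayscale images.

    images: array of shape (N, H, W). Returns an (H, W) array with the
    zero frequency moved to the center by fftshift.
    """
    imgs = np.asarray(images, dtype=np.float64)
    if imgs.ndim != 3:
        raise ValueError("expected an array of shape (N, H, W)")
    spectra = np.fft.fft2(imgs, axes=(-2, -1))         # per-image 2D DFT
    spectra = np.fft.fftshift(spectra, axes=(-2, -1))  # move DC to the center
    return np.abs(spectra).mean(axis=0)                # average the magnitudes


def to_display(mag: np.ndarray) -> np.ndarray:
    """Log-scale for plotting, since magnitudes span several orders."""
    return np.log1p(mag)


# Usage with placeholder arrays standing in for real and generated images.
rng = np.random.default_rng(0)
real_imgs = rng.random((16, 64, 64))
fake_imgs = rng.random((16, 64, 64))
diff = to_display(averaged_magnitude_spectrum(fake_imgs)) - to_display(
    averaged_magnitude_spectrum(real_imgs)
)
print(diff.shape)  # (64, 64)
```

Averaging magnitudes rather than complex coefficients discards phase, which varies from image to image; what remains is the shared frequency profile, where systematic peaks from a generator stand out.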

*표시는 필수 입력사항입니다.

| 전화번호 |

|---|

| 기사명 | 저자명 | 페이지 | 원문 | 기사목차 |

|---|

| 번호 | 발행일자 | 권호명 | 제본정보 | 자료실 | 원문 | 신청 페이지 |

|---|

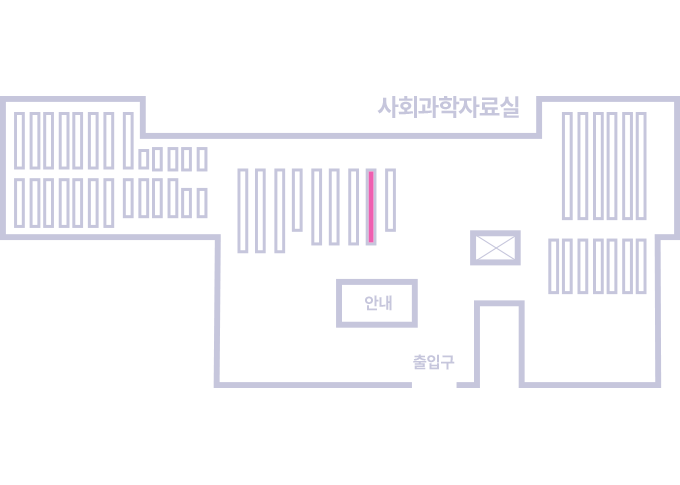

도서위치안내: / 서가번호:

우편복사 목록담기를 완료하였습니다.

*표시는 필수 입력사항입니다.

저장 되었습니다.