Title Page

Contents

Abstract 9

Ⅰ. Introduction 11

Ⅱ. Background 16

2.1. Sensor-Based HAR 16

2.2. Convolutional Neural Network 17

2.3. Transformer model 18

2.4. Position Embedding 20

2.5. Long and Local dependencies 21

2.6. Related work on existing HAR models 22

Ⅲ. Human Activity Recognition model 24

3.1. Dataset description 24

3.1.1. KU-HAR dataset 24

3.1.2. UniMiB SHAR dataset 25

3.1.3. USC-HAD dataset 25

3.1.4. Dataset Preprocessing 27

3.2. Proposed model description 28

3.2.1. Input Definition 30

3.2.2. Convolutional Features Extractor Block 30

3.2.3. Multi-Head Self-Attention 31

3.2.4. Vector-based Relative Position Embedding 32

3.2.5. Feed-Forward Network 33

Ⅳ. Experiment & Result 34

4.1. Evaluation Metrics 34

4.2. Baseline Model 35

4.3. Experimental Setup Details 36

4.4. Experimental Result 38

Ⅴ. Discussion 41

5.1. Ablation Works of Improve Methods 42

5.1.1. Effect of Convolutional Feature Extract Block 42

5.1.2. Effect of Vector-based Relative Position Embedding 44

5.2. Ablation Works of Hyper-Parameters 47

5.2.1. Impact of Convolutional Layer Numbers 47

5.2.2. Impact of Convolutional Filter Numbers 48

5.2.3. Impact of Head Numbers 50

Ⅵ. Conclusion 51

References 53

Abstract in Korean 64

〈Figure 2-1-1〉 Example of a HAR system based on sensors. 17

〈Figure 2-3-1〉 Transformer model architecture. 19

〈Figure 3-1-1〉 Activity class distribution of the datasets. (a) KU-HAR, (b) UniMiB SHAR, (c) USC-HAD 26

〈Figure 3-2-1〉 Overall Architecture of the Human Activity Classification Model. The right dashed box indicates the Convolutional Feature Extractor Block (CFEB).... 29

〈Figure 3-2-2〉 Self-attention modules with relative position embedding using vector parameters (vRPE-SA). Newly added parts are depicted in grey area. Firstly,... 33

〈Figure 4-4-1〉 Validation accuracy and loss curves for the two models on the USC-HAD, KU-HAR and UniMiB SHAR datasets. 38

〈Figure 5-1-1〉 Attention scores visualization of Baseline model (a) and enhanced versions (b). By using the Convolution feature extractor block module, the local...

〈Figure 5-2-1〉 Confusion Matrix. Baseline model (a), Baseline model with Initial Relative Position Embedding (b), and Baseline model with vRPE (c).

〈Figure 5-2-1〉 UniMiB SHAR Dataset's validation set loss for the impact of different number of convolutional filters on the proposed model. 49

*표시는 필수 입력사항입니다.

| 전화번호 |

|---|

| 기사명 | 저자명 | 페이지 | 원문 | 기사목차 |

|---|

| 번호 | 발행일자 | 권호명 | 제본정보 | 자료실 | 원문 | 신청 페이지 |

|---|

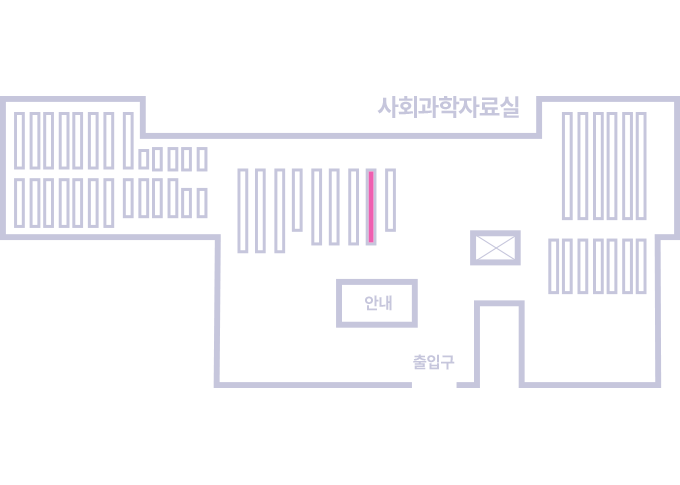

도서위치안내: / 서가번호:

우편복사 목록담기를 완료하였습니다.

*표시는 필수 입력사항입니다.

저장 되었습니다.