Title Page

Abstract

Contents

Chapter 1. Introduction 19

1.1. Human Activity Recognition (HAR) 19

1.2. Motivation 23

1.3. Related Work of HAR 24

1.3.1. Binary-based Silhouette Representation for HAR 24

1.3.2. Depth-based Silhouette Representation for HAR 25

1.3.3. Recognized Body Parts Representation for HAR 26

1.3.4. Random Forests in HAR 28

1.3.5. Hidden Markov Models in HAR 29

1.4. Proposed Features for HAR 30

1.5. Original Contributions 31

1.6. Thesis Organization 32

Chapter 2. Methodologies For HAR 34

2.1. Silhouettes Extraction Using Region of Interest (ROI) 35

2.2. R Transformation 36

2.2.1. Radon Transform 36

2.2.2. R Transform 39

2.3. Principal Component Analysis (PCA) 40

2.4. Linear Discriminant Analysis (LDA) 42

2.5. Random Forests (RFs) 43

2.6. Codebook Generation and Symbol Selection 45

2.7. Training and Recognition via HMM 46

Chapter 3. Depth Silhouette based Translation and Scaling Invariant Features for HAR 51

3.1. Silhouette Feature Preprocessing 52

3.2. Depth Feature Extraction Using R Transformation 53

3.2.1. Feature Extraction Using Radon Transform 53

3.2.2. Feature Extraction Using R Transform 54

3.2.3. Invariance of R Transform Features 54

3.2.4. R Transform of an Activity 55

3.2.5. Dimension Reduction of R Transformation Profiles Using PCA 56

3.3. LDA on PC-R Features 57

3.4. Codebook Generation and Symbol Selection 59

3.5. Activity Training and Recognition via HMM 59

3.6. Experimental Settings 61

3.7. Experimental Results 61

3.7.1. Conventional Features of PC and IC Depth Silhouettes 61

3.7.2. R Transform on Binary and Depth Silhouettes 63

3.7.3. Recognition Results using PC and IC Depth Silhouettes 66

3.7.4. Recognition Results using R Transform on Binary and Depth Silhouettes 67

3.8. Conclusion 68

Chapter 4. A HAR System Using Recognized Body Parts Features via Random Forests 69

4.1. Methodology for Depth Silhouette Features 70

4.1.1. Depth Image Preprocessing 71

4.1.2. Overview of the Proposed Recognized Body Parts System 71

4.2. Processing of Labeled DB Generation 71

4.2.1. Human Body Skeleton Fitting 71

4.2.2. Segmentation by Gaussian Contours 71

4.2.3. Color-Coded Segmentation of Depth Body Parts 73

4.3. Depth Silhouettes Features 74

4.4. Training Random Forests 74

4.5. Body Parts Labeling and Feature Generation 76

4.6. Codebook Generation and Symbol Selection 78

4.7. Activity Training and Recognition via HMM 78

4.8. Experimental Settings 80

4.9. Experimental Results 80

4.9.1. Body Parts Recognition via RFs 80

4.9.2. Recognition Results of Conventional vs Proposed HAR Techniques 82

4.10. Discussion 83

4.11. Conclusion 85

Chapter 5. Recognized Body Parts Features Using Synthetic Depth and Pre-labeled Body Parts Silhouettes for HAR 86

5.1. Synthetic Depth Silhouettes Data Processing 87

5.2. Generation of Synthetic DB: Depth and Pre-labeled Body Parts Silhouettes 88

5.2.1. 3D Human Body Modeling 88

5.2.2. Generation of Synthetic Depth and Pre-labeled Body Parts Silhouettes 89

5.2.3. Depth Silhouettes Features 90

5.2.4. Training Random Forests 90

5.3. Recognition of Body Parts and Feature Generation 91

5.4. Codebook Generation and Symbol Selection 93

5.5. Activity Training and Recognition via HMM 93

5.6. Experimental Settings 94

5.7. Experimental Results 95

5.8. Conclusion 96

Chapter 6. Real-Time Life Logging via Depth Imaging-based HAR and HMMs: An Application for Smart Environments 97

6.1. What is a Life-log HAR System 97

6.2. Methodology for Life-log HAR 99

6.3. Processes for the Proposed Life-log HAR System 99

6.4. Training the Life Logging System 100

6.4.1. Joint Points Body Parts Features 101

6.4.2. Joint Points for Feature Generation 102

6.4.3. Activity Training Using HMMs 102

6.5. Running of the Life Logging System 103

6.5.1. Recognition Using HMMs 103

6.5.2. Recorded Data as Life Logs 103

6.6. Experimental Settings 104

6.7. Experimental Results 104

6.7.1. Graphical User Interface of Life Logging System 105

6.7.2. Recognition Performance of Life Log System 108

6.7.3. Recognition Results of the Conventional and Proposed Life Log HAR Techniques 110

6.8. Conclusion 111

Chapter 7. Conclusion of the Proposed HAR Techniques 112

7.1. Conclusion 112

7.1.1. Pros and Cons of the Proposed Techniques 113

7.2. Future Work 114

7.2.1. 3D Human Body Model for Fast Real-Time HAR 114

7.2.2. Real Time Tracking System 115

7.2.3. Human Activity Prediction 115

7.2.4. Multiple Viewpoints 115

Bibliography 116

Appendix A. List of Publications 128

Figure 1.1: A general flow architecture of a video-based human activity recognition system. 24

Figure 2.1: Overview flow of a video-based human activity recognition system. 34

Figure 2.2: Process of Radon transform on depth silhouette. 37

Figure 2.3: Two depth silhouettes of different activities and their corresponding Radon transforms (sinusoidal curves). 38

Figure 2.4: R transform of the depth walking silhouette which has been translated, scaled, and rotated by 45°. 40

Figure 2.5: Top 100 eigenvalues corresponding to the eigenvectors. 41

Figure 2.6: A randomized decision forest. 44

Figure 2.7: Basic steps of (a) codebook generation and (b) symbol selection used in HMM. 46

Figure 2.8: Basic structure of the probabilistic parameters of an HMM. 47

Figure 2.9: An example of structure and transition probabilities (a) before and (b) after training HMM using cooking activity. 49

Figure 3.1: Proposed depth silhouette based R transformation human activity recognition system. 52

Figure 3.2: Image sequence of (a) binary silhouettes and (b) depth silhouettes of a walking activity. 53

Figure 3.3: Flow of the R transformation on a single frame, consisting of (a) the Radon transform, (b) two projections at two different angles (90°, 180°), (c) the Radon transform, and (d) the 1D R transform profile of a walking silhouette. 54

Figure 3.4: R transformation results of a walking silhouette: (a) the original, (b) its translated version, and (c) its scaled version. The corresponding R profiles are all identical. 55

Figure 3.5: Overall flow of the R transformation using a series of depth silhouettes of an activity, including (a) depth silhouettes, (b) Radon transform maps, (c) R transforms, and (d) time-sequential R transform profiles. 56

Figure 3.6: Plots of time-sequential R transform profiles from the depth silhouettes of our six human activities. 57

Figure 3.7: Codebook generation and symbol selection. 59

Figure 3.8: Running HMM structure and transition probabilities (a) before and (b) after training. 60

Figure 3.9: Five PCs from the depth silhouettes of all six typical human activities. 62

Figure 3.10: 3D plot of LDA on PC features having 1500 depth silhouettes of human activities. 62

Figure 3.11: Five ICs from the depth silhouettes of all six typical human activities. 62

Figure 3.12: 3D plot of LDA on IC features having 1500 depth silhouettes of human activities. 63

Figure 3.13: Three PCs containing global features of six typical human activities from the binary silhouettes. 64

Figure 3.14: 3D plot of LDA on PC-R features having 1500 binary silhouettes of human activities. 64

Figure 3.15: Three PCs containing global features of six typical human activities from the depth silhouettes. 65

Figure 3.16: 3D plot of LDA on PC-R features having 1500 depth silhouettes of human activities. 66

Figure 4.1: Overview of the proposed recognized body parts based HAR system: 70

Figure 4.2: (a) Depth silhouette (b) skeleton body model, and (c) fitted skeleton model with 15 joints. 72

Figure 4.3: (a) Human body model of parameterized Gaussian contours (b) fitted contour locations of a depth silhouette from a walking activity. 72

Figure 4.4: Pre-labeled body parts in different colors of sample human activities silhouettes. 73

Figure 4.5: Random forest trees containing nodes (black) and leaves (green). 74

Figure 4.6: In training random trees, depth silhouettes and their pre-labeled body parts silhouettes are utilized. 75

Figure 4.7: Flows of body parts recognition of depth silhouettes and centroids generation. 76

Figure 4.8: Sequences of (a) depth silhouettes, and (b) recognized body parts silhouettes of a walking activity. 77

Figure 4.9: Motion parameters features from the centroids of the recognized body parts: 78

Figure 4.10: Steps for codebook generation and symbol selection. 79

Figure 4.11: A right hand waving HMM (a) before and (b) after training showing transition probabilities. 79

Figure 4.12: Recognition accuracy with respect to the trees size. 81

Figure 4.13: Recognition accuracy with respect to the window size. 82

Figure 4.14: Recognition accuracy with respect to the number of features. 82

Figure 4.15: Comparison of recognition accuracy of various body parts. 83

Figure 5.1: Overall flow of the proposed recognized body parts based HAR system: 87

Figure 5.2: Human body model of (a) a biped skeleton model (b) a skin model (c) a fitted skeleton model with the skin model to create 3D motion with 15 joints. 88

Figure 5.3: (a) A 3D human body model, (b) degrees of freedom (DOFs), and (c) joint points of a walking model. 89

Figure 5.4: Synthetic depth and body parts labeled depth silhouette. 89

Figure 5.5: Training RFs using the synthetic DB. 91

Figure 5.6: Body parts recognition of (a) synthetic and (b) real data of depth silhouettes and centroids generation. 92

Figure 5.7: Motion parameters features from the centroids of the recognized body parts: 93

Figure 5.8: Codebook Generation and Symbol Selection. 94

Figure 5.9: Both hand waving HMM after training showing transition probabilities. 94

Figure 6.1: Overall flow of the proposed life logging system: 100

Figure 6.2: Depth silhouettes of random activities of smart environment used in life-log HAR system. 101

Figure 6.3: Skeleton body model fitting based on depth silhouettes of different activities including walking, hand clapping and rushing for life-log HAR system. 101

Figure 6.4: Joint points body parts, containing (a) a depth silhouette, (b) skeleton body part labeling, and (c) a skeleton model containing 15 joints. 101

Figure 6.5: Magnitude features from the joint points body parts of different life-log activities of various smart environments, such as (a) a smart home, including walking and hand clapping, (b) a smart office, including rushing and working at a computer, and (c) a smart hospital, including taking medicine... 102

Figure 6.6: Directional features from the joint points body parts of different life-log activities of various smart environments. 103

Figure 6.7: Training data collection interface of our developed system containing (a) RGB images (b) depth maps having background (c) depth silhouettes after background subtraction (d) skeleton model and (e) joint points identification of specific skeleton. 106

Figure 6.8: Feature generation interface of our system: 106

Figure 6.9: HMM training interface. 107

Figure 6.10: Life logging interface which utilizes trained HMMs and generates life logs as shown at the bottom. 108

Figure 6.11: Real time recognition performance of life log system of smart home activities using LDA on PC feature approach. 109

Recent advances in information technology have made human activity recognition (HAR) feasible for practical applications such as personal lifecare and healthcare services. Conventional HAR relies on RGB imaging devices and is mainly based on binary silhouettes, which are the most commonly applied representation for human activity recognition. However, binary silhouettes are ambiguous because their pixel values carry limited information, so they contribute little to efficient human activity recognition. For better feature representation, this work proposes depth silhouettes of human activity, in which body parts are differentiated by their intensity values. In depth silhouettes, the body pixel values are distributed according to the distance to the camera and represent the human body better than binary silhouettes. Thus, binary silhouettes can be replaced by depth silhouettes and used with hidden Markov models (HMMs) to achieve better HAR performance.

Therefore, in this thesis, we present a novel depth video-based, translation- and scaling-invariant human activity recognition (HAR) system that utilizes the R transformation of depth silhouettes. For HAR in indoor settings, an invariant method is critical so that a subject can perform activities freely anywhere in the camera view without the translation and scaling problems of human body silhouettes. We obtain such invariant features via the R transformation of depth silhouettes. In the R transformation, a 2D feature map is first computed through the Radon transform of each depth silhouette, and a 1D feature profile is then computed through the R transform to obtain the translation- and scaling-invariant features. We then apply Principal Component Analysis (PCA) for dimension reduction and Linear Discriminant Analysis (LDA) to make the features more prominent, compact, and robust. Finally, Hidden Markov Models (HMMs) are used to train and recognize different human activities. The depth silhouette sequences thus provide features that, used with HMMs, yield better HAR than binary silhouette-based approaches as well as conventional PC- and IC-based depth silhouette approaches.
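The two-step R transformation described above can be illustrated with a minimal pure-Python sketch. It is not the thesis implementation: the function names, the nearest-bin discretization of the Radon transform, and the normalization by the profile maximum (a common way to obtain scale invariance) are all assumptions made for illustration.

```python
import math

def radon_transform(image, n_angles=180):
    """Approximate Radon transform: for each angle, accumulate pixel values
    into bins indexed by rho = x*cos(theta) + y*sin(theta) (nearest bin)."""
    height, width = len(image), len(image[0])
    diag = math.ceil(math.hypot(height, width))  # bound on |rho|
    n_bins = 2 * diag + 1
    sinogram = []
    for k in range(n_angles):
        theta = math.pi * k / n_angles
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        projection = [0.0] * n_bins
        for y, row in enumerate(image):
            for x, value in enumerate(row):
                if value:
                    rho = x * cos_t + y * sin_t
                    projection[round(rho) + diag] += value
        sinogram.append(projection)
    return sinogram

def r_transform(image, n_angles=180, normalize=True):
    """1D R transform profile: sum of squared Radon values over rho, per angle.
    Dividing by the maximum is an assumed normalization step."""
    profile = [sum(p * p for p in proj) for proj in radon_transform(image, n_angles)]
    if normalize:
        peak = max(profile)
        if peak > 0:
            profile = [r / peak for r in profile]
    return profile

def make_silhouette(x0, y0, size=8):
    """Hypothetical toy 'depth silhouette': a 2x3 block with depth value 2."""
    img = [[0] * size for _ in range(size)]
    for y in range(y0, y0 + 3):
        for x in range(x0, x0 + 2):
            img[y][x] = 2
    return img

original = make_silhouette(1, 2)
shifted = make_silhouette(4, 3)  # same shape, translated
raw_original = r_transform(original, normalize=False)
raw_shifted = r_transform(shifted, normalize=False)
print(raw_original[0], raw_shifted[0])    # profile value at 0 degrees
print(raw_original[90], raw_shifted[90])  # profile value at 90 degrees
```

In this toy setup the profile values at 0° and 90° agree exactly for the original and the translated block, because the shift moves the projection by a whole number of bins. At other angles the nearest-bin discretization makes the invariance only approximate, whereas the continuous R transform is exactly translation invariant.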

Although depth body silhouettes provide better representations of the human body through their depth values, they still do not make it possible to differentiate the various body parts. To enrich the depth information further, each body part must be identified from a human depth body silhouette, so a body part recognition technique is needed. Specific human body parts in the depth silhouettes of various human activities are recognized using trained random forests (RFs). The centroid of each body part is then computed, resulting in a set of 23 centroids per depth silhouette. From the spatiotemporal information of these centroids, motion parameters are computed, producing a set of magnitude and directional angle features. Finally, using these features, HMMs are trained to recognize various human activities. Experiments are performed on both real depth silhouettes and synthetic depth silhouettes to improve HAR.
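As a rough illustration of how magnitude and directional angle features might be derived from body-part centroids in consecutive frames, consider the sketch below. The abstract does not give the exact feature definitions, so the 3D Euclidean displacement for the magnitude, the image-plane angle from `atan2`, and the function name `motion_features` are assumptions.

```python
import math

def motion_features(prev_centroids, curr_centroids):
    """Return one (magnitude, angle_in_degrees) pair per body part.

    Each centroid is an (x, y, z) tuple; both lists are assumed to hold the
    same body parts in the same order (e.g. 23 centroids per silhouette).
    """
    features = []
    for (x0, y0, z0), (x1, y1, z1) in zip(prev_centroids, curr_centroids):
        dx, dy, dz = x1 - x0, y1 - y0, z1 - z0
        magnitude = math.sqrt(dx * dx + dy * dy + dz * dz)  # 3D displacement
        angle = math.degrees(math.atan2(dy, dx))            # assumed: angle in the image plane
        features.append((magnitude, angle))
    return features

# Hypothetical centroids of two body parts in two consecutive frames.
frame_t0 = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)]
frame_t1 = [(3.0, 4.0, 0.0), (1.0, 1.0, 1.0)]
features = motion_features(frame_t0, frame_t1)
print(features)
```

Concatenating these pairs over all centroids would give one feature vector per frame transition, which could then be quantized into symbols for HMM training.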

Researchers working in the field of HAR need a proper platform to train, model, and test activities and to use them in research on smart services for smart homes and other environments. Therefore, a real-time life logging system is designed that logs daily human activities. In this work, we present a real-time life logging system via depth imaging-based human activity recognition. Our system is composed of two key processes: training the life logging system, and running the trained system to record life logs. The training system includes the following functions: (i) data collection from a depth camera, (ii) extraction of body joint points from each depth silhouette, (iii) feature generation from the body joint points, and finally (iv) training of the activity recognizer (i.e., Hidden Markov Models). After training, one can run the trained system, which recognizes the learned activities and stores life log information for future reference. Our life logging system operates in real time, and the generated life log information is stored in a daily log database. We believe that the developed life logging system should be beneficial in service applications for smart environments.
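The running phase (recognition with trained HMMs followed by storing a log entry) could look roughly like the following sketch. It assumes discrete HMMs over codebook symbols scored with the standard forward algorithm. The toy model parameters, the activity names, and the helpers `recognize` and `log_activity` are hypothetical and are not taken from the thesis.

```python
def forward_likelihood(symbols, start, trans, emit):
    """Likelihood of a symbol sequence under a discrete HMM (forward algorithm)."""
    n_states = len(start)
    alpha = [start[i] * emit[i][symbols[0]] for i in range(n_states)]
    for symbol in symbols[1:]:
        alpha = [
            sum(alpha[i] * trans[i][j] for i in range(n_states)) * emit[j][symbol]
            for j in range(n_states)
        ]
    return sum(alpha)

def recognize(symbols, models):
    """Pick the activity whose HMM gives the highest likelihood."""
    return max(models, key=lambda name: forward_likelihood(symbols, *models[name]))

def log_activity(life_log, timestamp, symbols, models):
    """Append a hypothetical life-log record for the recognized activity."""
    entry = {"time": timestamp, "activity": recognize(symbols, models)}
    life_log.append(entry)
    return entry

# Toy two-state left-to-right models: (start, transition, emission).
models = {
    "walking": ([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], [[0.9, 0.1], [0.8, 0.2]]),
    "clapping": ([1.0, 0.0], [[0.5, 0.5], [0.0, 1.0]], [[0.1, 0.9], [0.2, 0.8]]),
}

life_log = []
log_activity(life_log, "09:00", [0, 0, 1, 0], models)
log_activity(life_log, "09:05", [1, 1, 1], models)
print(life_log)
```

For long observation sequences, a practical implementation would work with scaled or log-domain probabilities to avoid numerical underflow; the plain product form is kept here for readability.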

| 전화번호 |

|---|

| 기사명 | 저자명 | 페이지 | 원문 | 기사목차 |

|---|

| 번호 | 발행일자 | 권호명 | 제본정보 | 자료실 | 원문 | 신청 페이지 |

|---|

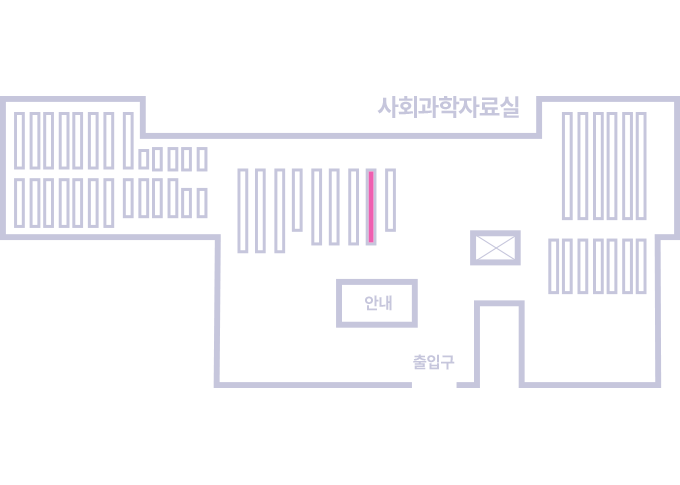

도서위치안내: / 서가번호:

우편복사 목록담기를 완료하였습니다.

*표시는 필수 입력사항입니다.

저장 되었습니다.